Generative UI Is Expensive and Nobody Knows When to Use It

Google released A2UI in December 2025 and people barely noticed. A few weeks later someone on Reddit posted "Has anyone solved generative UI?" with zero upvotes. That gap tells you everything about where this technology actually is.

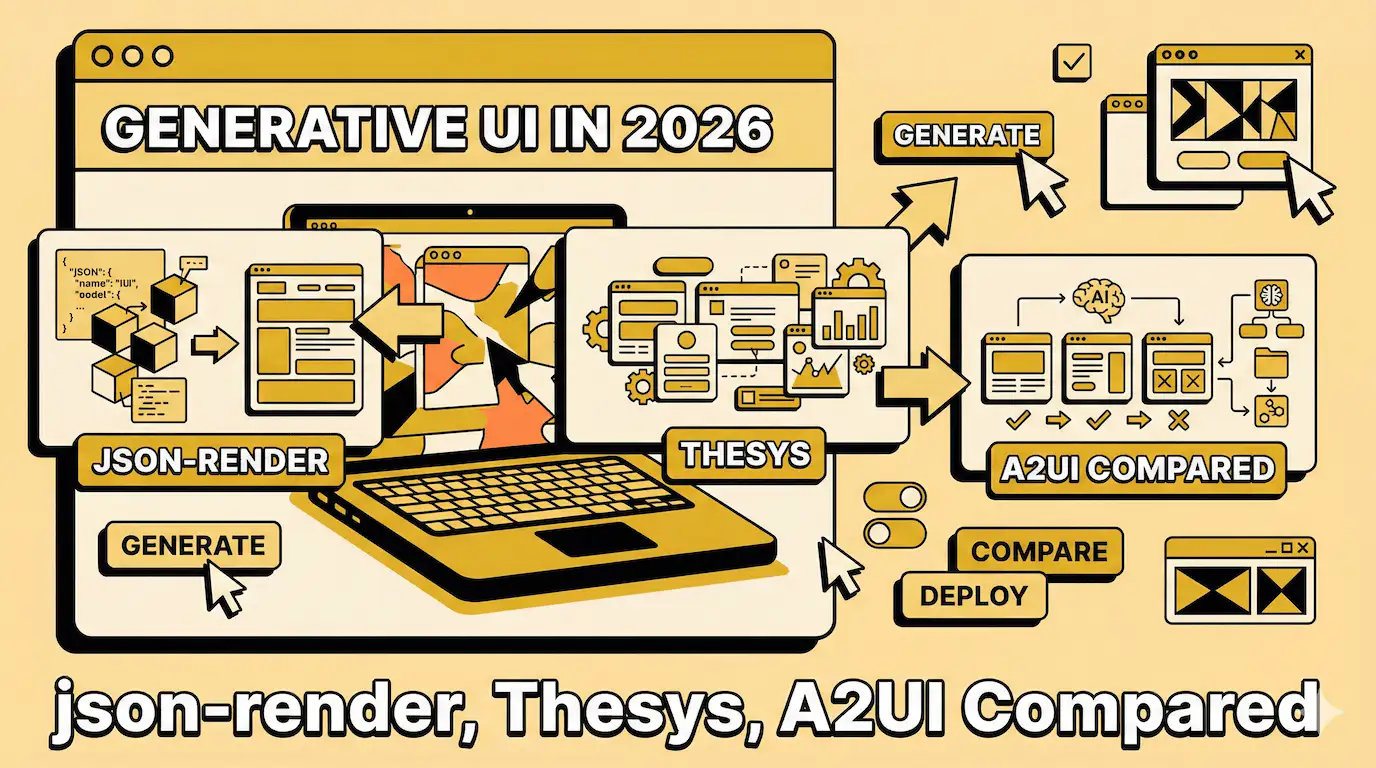

Generative UI is supposed to let AI build your interface in real time. You type "show me a revenue dashboard" and the LLM returns actual components. Cards. Charts. Buttons. Not markdown. Not a wall of text. Real UI.

But here's what nobody talks about. It's expensive. It's inconsistent. And most developers still can't figure out when they actually need it.

Four platforms doing the same thing differently

json-render gives you a catalog. You define components up front using TypeScript and Zod. The AI can only use what you allow. It's guardrails first. Security first. Boring first. Your LLM generates JSON that maps to your React components and nothing else.
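Here is what "the AI can only use what you allow" looks like in practice. This is a minimal sketch, not json-render's API: the article says json-render builds its catalog with Zod, but to stay dependency-free this version uses hand-written validators. The names `catalog`, `validate`, `UINode`, and the `Card`/`Metric`/`Button` components are all hypothetical.

```typescript
// Sketch of catalog-first guardrails. Hypothetical names throughout; this is
// NOT json-render's API (which, per the article, defines its catalog with Zod).
type Props = Record<string, unknown>;

interface UINode {
  type: string;
  props?: Props;
  children?: UINode[];
}

// Returns null when the props are acceptable, otherwise an error message.
type PropValidator = (props: Props) => string | null;

// The only components the model is allowed to emit.
const catalog = new Map<string, PropValidator>([
  ["Card", (p) => (typeof p.title === "string" ? null : "Card.title must be a string")],
  [
    "Metric",
    (p) =>
      typeof p.label === "string" && typeof p.value === "number"
        ? null
        : "Metric needs a string label and a numeric value",
  ],
  ["Button", (p) => (typeof p.text === "string" ? null : "Button.text must be a string")],
]);

// Walk the tree the model produced and collect every violation with its path.
function validate(node: UINode, path = "root"): string[] {
  const check = catalog.get(node.type);
  if (!check) return [`${path}: unknown component "${node.type}"`];
  const errors: string[] = [];
  const propError = check(node.props ?? {});
  if (propError) errors.push(`${path}: ${propError}`);
  (node.children ?? []).forEach((child, i) => {
    errors.push(...validate(child, `${path}.children[${i}]`));
  });
  return errors;
}

// Example: the model tried to sneak in a component that is not in the catalog.
const fromModel: UINode = {
  type: "Card",
  props: { title: "Revenue" },
  children: [
    { type: "Metric", props: { label: "MRR", value: 42000 } },
    { type: "Iframe", props: { src: "https://example.com" } },
  ],
};

const problems = validate(fromModel); // non-empty: reject or re-prompt instead of rendering
```

The design choice is that anything outside the catalog is an error, not a best-effort render. In a real app you would also parse and shape-check the raw JSON string before treating it as a `UINode`.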

Thesys (C1) is an API wrapper. You point your OpenAI client at their endpoint instead. They intercept the response and turn it into UI components. Drop-in replacement. Works with any model. Their pitch is you build AI frontends "10x faster and 80% cheaper" but I haven't seen anyone verify those numbers.

A2UI is a protocol. Google-backed. Open source. Framework-agnostic. The idea is your agent sends JSON describing UI and your client renders it with whatever components you want. React, Flutter, Angular, native mobile. Same response works everywhere.
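The "same response works everywhere" idea comes down to separating the description from the renderer. The sketch below is a simplified stand-in and deliberately NOT the A2UI wire format (which, as the next section notes, involves component IDs and surface updates). `UISpec`, `Renderer`, and both renderers are hypothetical: one emits HTML, the other plain text, and both consume the identical message.

```typescript
// Hypothetical, simplified UI description. NOT the actual A2UI schema.
type UISpec =
  | { kind: "column"; children: UISpec[] }
  | { kind: "text"; value: string }
  | { kind: "button"; label: string; action: string };

// Each client platform supplies its own renderer for the same description.
interface Renderer<T> {
  column(children: T[]): T;
  text(value: string): T;
  button(label: string, action: string): T;
}

function render<T>(node: UISpec, r: Renderer<T>): T {
  switch (node.kind) {
    case "column":
      return r.column(node.children.map((c) => render(c, r)));
    case "text":
      return r.text(node.value);
    case "button":
      return r.button(node.label, node.action);
  }
}

// Minimal escaping so model-supplied strings cannot inject markup.
const escapeHtml = (s: string): string =>
  s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");

const htmlRenderer: Renderer<string> = {
  column: (children) => `<div class="column">${children.join("")}</div>`,
  text: (value) => `<p>${escapeHtml(value)}</p>`,
  button: (label, action) =>
    `<button data-action="${escapeHtml(action)}">${escapeHtml(label)}</button>`,
};

const textRenderer: Renderer<string> = {
  column: (children) => children.join("\n"),
  text: (value) => value,
  button: (label) => `[ ${label} ]`,
};

// One agent message, two very different clients.
const message: UISpec = {
  kind: "column",
  children: [
    { kind: "text", value: "Revenue is up 12%" },
    { kind: "button", label: "Open report", action: "open_report" },
  ],
};

const asHtml = render(message, htmlRenderer);
const asText = render(message, textRenderer);
```

The agent never names a React or Flutter widget. It names abstract kinds, and each client decides what a "button" is on its platform.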

Vercel AI SDK started this. They launched v0.dev in 2023 and open-sourced the generative UI tech in early 2024. React Server Components. Streaming. Tool calls. The most mature option and also the one developers complain about most.

One developer on Reddit said the Vercel SDK "remains somewhat unstable which has forced me to refactor several times. It's probably simpler to use the OpenAI SDK on their own". Another called it "the highest-ROI skill for AI beginners". Both are probably right.

The protocol problem

A2UI is verbose. LLMs struggle to generate it correctly. It needs detailed schema knowledge. Component IDs. Surface updates. Literal strings. All that structure makes rendering perfect but authoring painful.

So developers created Open-JSON-UI. Token-efficient. Easy for agents to generate. Low failure rate. But it's not directly renderable. You need a smart client to interpret it.

Here's the comparison that matters:

| Feature | A2UI | Open-JSON-UI |
| --- | --- | --- |
| Primary goal | Precise rendering | Easy generation |
| LLM-friendliness | Poor | Excellent |
| Verbosity | High | Low |
| Rendering | Direct | Requires translation |

You can have easy generation or easy rendering. Not both.
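The "requires translation" row is where the smart client earns its keep. A rough sketch of that step: the model emits a terse nested format, and the client expands it into an explicit, ID-addressed component list. Both formats here are hypothetical illustrations of the trade-off, not the actual Open-JSON-UI or A2UI schemas, and `expand` is an illustrative name.

```typescript
// Hypothetical compact format an LLM might emit: [type, props, children?]
type Compact = [string, Record<string, unknown>, Compact[]?];

// Hypothetical explicit format: a flat component list with IDs and child references.
interface ExplicitComponent {
  id: string;
  type: string;
  props: Record<string, unknown>;
  childIds: string[];
}

interface ExplicitSurface {
  rootId: string;
  components: ExplicitComponent[];
}

// The "smart client" step: expand the compact tree into explicit, ID-addressed components.
function expand(tree: Compact): ExplicitSurface {
  const components: ExplicitComponent[] = [];
  let counter = 0;

  const visit = (node: Compact): string => {
    const [type, props, children = []] = node;
    const id = `${type}-${counter++}`;
    const entry: ExplicitComponent = { id, type, props, childIds: [] };
    components.push(entry); // parent is recorded before its children
    entry.childIds = children.map(visit);
    return id;
  };

  const rootId = visit(tree);
  return { rootId, components };
}

const compact: Compact = [
  "card",
  { title: "Revenue" },
  [["metric", { label: "MRR", value: 42000 }], ["button", { text: "Export" }]],
];

const surface = expand(compact);
```

The model only has to get the short nested form right, and the IDs and child references are generated deterministically on the client. Comparing `JSON.stringify` lengths of the two shapes gives a crude sense of the verbosity gap, though characters are only a rough proxy for tokens.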

Why it costs real money

GPT-4o launched at $5 per million input tokens and $15 per million output tokens; later GPT-4o snapshots cut that to $2.50 and $10. GPT-4 Turbo costs double the launch price. Every time your LLM generates UI instead of text, you're burning tokens on component names, props, layouts, data bindings, and nested children.

A simple dashboard might need 2,000 output tokens. That's $0.03 with GPT-4o. Doesn't sound like much until you have 10,000 users each generating one custom UI a day. Now it's $300 a day. $9,000 a month. For UI generation alone, before you even count input tokens.

And that's assuming the LLM gets it right the first time. Spoiler: it doesn't.
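The arithmetic above, plus the retry problem, fits in a small back-of-the-envelope model. This is a sketch under simple assumptions: prices are the $5/$15 GPT-4o figures quoted above, and each failed generation is retried independently, so the expected number of attempts per usable UI is 1 / (1 − p). The 1,500 input tokens and 20% failure rate in the second scenario are hypothetical numbers for illustration, not measurements.

```typescript
// Back-of-the-envelope cost model for generated UI (USD, prices per million tokens).
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

interface Workload {
  inputTokens: number; // prompt + schema/catalog sent per generation
  outputTokens: number; // UI JSON produced per generation
  generationsPerDay: number;
  failureRate: number; // chance a generation is unusable and must be retried, 0 <= p < 1
}

// With independent retries, expected attempts per usable UI is 1 / (1 - p).
function dailyCost(price: Pricing, w: Workload): number {
  if (w.failureRate < 0 || w.failureRate >= 1) {
    throw new RangeError("failureRate must be in [0, 1)");
  }
  const perAttempt =
    (w.inputTokens * price.inputPerMillion + w.outputTokens * price.outputPerMillion) / 1_000_000;
  const expectedAttempts = 1 / (1 - w.failureRate);
  return perAttempt * expectedAttempts * w.generationsPerDay;
}

const gpt4oLaunch: Pricing = { inputPerMillion: 5, outputPerMillion: 15 };

// The scenario above: output tokens only, first try always succeeds.
const optimistic = dailyCost(gpt4oLaunch, {
  inputTokens: 0,
  outputTokens: 2_000,
  generationsPerDay: 10_000,
  failureRate: 0,
});

// Hypothetical: a 1,500-token prompt with schema, and 1 in 5 generations retried.
const withRetries = dailyCost(gpt4oLaunch, {
  inputTokens: 1_500,
  outputTokens: 2_000,
  generationsPerDay: 10_000,
  failureRate: 0.2,
});
```

The first call reproduces the $300 a day from above. The second works out to $468.75 a day, roughly $14,000 a month, and input tokens plus retries alone account for the difference.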

One company building with this shipped an AI podcast app to Google Play. Another is building a SaaS tool. Both are probably discovering token costs the hard way.

The Anthropic approach

Anthropic released Artifacts in June 2024. Click a button in Claude and it generates code snippets, documents, and website designs in a dedicated window. You can edit them in real time.

The Anthropic team built Artifacts using Claude. Alex Tamkin described the process: "I'd describe the UI I wanted for Claude and it would generate the code. I'd copy this code over and render it. I'd then take a look at what I liked or didn't like, spot any bugs, and just keep repeating".

They shipped it in three months.

That's the thing about generative UI. The people building the tools use the tools to build the tools. Recursive. Meta. Occasionally useful.

What 2026 supposedly brings

88% of business leaders are increasing AI budgets for generative UI capabilities. UX designers are calling 2026 "the year we start the shift to Generative UI". Interfaces will "restructure themselves in real-time based on users' intent, mood, and historical behavior".

But the challenges are the same challenges generative AI has always had. Hallucinations become UI bugs. Biases become design decisions. And you need immense processing power to generate unique interfaces for billions of users simultaneously.

Quality control is manual. AI doesn't understand brand aesthetics. There's a learning curve even for developers. And over-reliance on AI kills creativity.

The static interface is dead. Long live the static interface.

When you actually need this

You don't need generative UI for most apps. You need it when:

- User intent is unpredictable and the possible UIs are infinite
- Personalization matters more than consistency
- You're building an agent that needs to show different tools for different tasks
- Your users are already chatting with an LLM and you want richer outputs than markdown

If you're building a dashboard where everyone sees the same charts just with different data, skip it. Use regular components. Bind the data. Save the tokens.

If you're building a copilot where the AI might need to show a form, then a chart, then a code editor, then a calendar based on what the user asks for, generative UI starts making sense.
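One way to keep that copilot case manageable is to let the model choose among a closed set of surfaces per turn while the client owns the actual components. A minimal sketch, assuming the model returns a JSON object with a `kind` field. `Surface`, `parseSurface`, and `describe` are hypothetical names, and strings stand in for real components.

```typescript
// Hypothetical surfaces a copilot might choose between, one per turn.
type Surface =
  | { kind: "form"; fields: string[] }
  | { kind: "chart"; series: number[] }
  | { kind: "code"; language: string; source: string }
  | { kind: "calendar"; dates: string[] }
  | { kind: "text"; body: string };

// Turn the model's raw JSON into a known surface; anything else degrades to plain text.
function parseSurface(raw: string): Surface {
  try {
    const v = JSON.parse(raw) as Record<string, unknown>;
    const { kind, fields, series, language, source, dates } = v; // throws on null
    switch (kind) {
      case "form":
        if (Array.isArray(fields)) return { kind: "form", fields: fields.map(String) };
        break;
      case "chart":
        if (Array.isArray(series) && series.every((n) => typeof n === "number")) {
          return { kind: "chart", series };
        }
        break;
      case "code":
        if (typeof language === "string" && typeof source === "string") {
          return { kind: "code", language, source };
        }
        break;
      case "calendar":
        if (Array.isArray(dates)) return { kind: "calendar", dates: dates.map(String) };
        break;
    }
  } catch {
    // Not valid JSON, or not an object: use the fallback below.
  }
  return { kind: "text", body: raw };
}

// Client-side mapping from surface to component (strings stand in for real components).
function describe(s: Surface): string {
  switch (s.kind) {
    case "form":
      return `Form with ${s.fields.length} field(s)`;
    case "chart":
      return `Chart of ${s.series.length} point(s)`;
    case "code":
      return `${s.language} editor`;
    case "calendar":
      return `Calendar with ${s.dates.length} date(s)`;
    case "text":
      return s.body;
    default: {
      const unhandled: never = s; // compile-time error if a new kind is left unhandled
      return unhandled;
    }
  }
}
```

The shape can change every turn, but only within a set you designed, and a malformed response falls back to text instead of a broken screen.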

The question isn't "can AI build my UI." The question is "should my UI change shape every time someone asks a question."

The Reddit reality check

That post asking "Has anyone solved generative UI?" got one comment. The person wanted to build an infinite canvas for brainstorming. Cards instead of text blocks. Tables and charts instead of paragraphs.

They tried prompting the model to assess output and establish a layout schema before rendering. Nothing worked consistently.

That's where this tech actually is. Not solved. Not settled. Not simple.

But json-render shipped in January 2026. Thesys is taking customers. A2UI has Google's backing and Flutter integration. Vercel's SDK has been in production since early 2024.

The tools exist. They work. Sometimes. When you need them. If you can afford the tokens.

I still think most interfaces should be designed by humans. But the AI is learning. And some interfaces probably should adapt. We'll figure out which ones by building the wrong thing a few hundred times first.
