Why I Think Small Models Will Win the AI Agent Race

Last tuesday i watched my automated support agent burn through $200 in API calls. It answered maybe 40 questions. Most of them were "what's your return policy" variations.

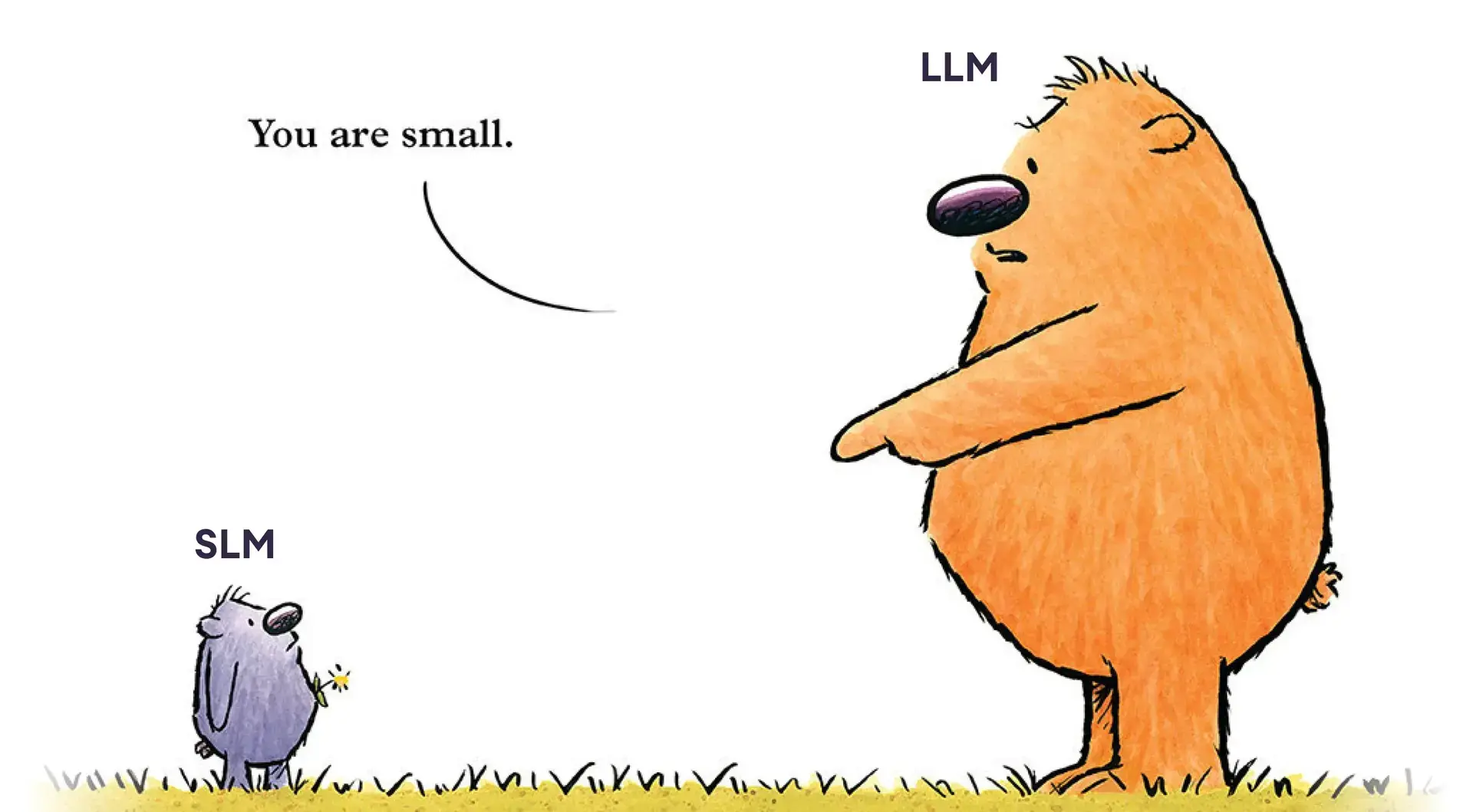

The agent worked fine. But i kept thinking about the model underneath. A massive 175 billion parameter thing processing the same five questions over and over. Like hiring a brain surgeon to check temperatures.

You've probably built something similar. An agent that routes emails or filters spam or generates reports. Works great until you see the bill.

The thing about agents that nobody talks about

Here's what i realized: agents are boring.

Not boring to build. Boring to be. An agent doesn't have interesting conversations. It doesn't write poetry. It runs the same workflow a thousand times. Click here, extract that, format this.

I used to think bigger models meant better agents. The scaling laws said so. More parameters, more intelligence, better results.

But then i read this paper from NVIDIA. The title is the thesis: "Small Language Models are the Future of Agentic AI." Not "could be." Not "might work for some cases." The future.

And the argument clicked immediately.

What actually happens inside an agent

Most agents do three things:

parse some input

call a tool or API

return structured output

That's it. No philosophy. No creativity. Just "take this JSON, hit that endpoint, give me back XML."
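Those three steps fit in a few lines. This is a minimal sketch, not my actual agent: the knowledge base, its placeholder entries, and the function names are all made up for illustration, and the model's part is stubbed out with plain string matching.

```python
import json
from typing import Optional

# Hypothetical knowledge base with placeholder entries (not real policy text).
KNOWLEDGE_BASE = {
    "return": "placeholder: text of the return policy",
    "shipping": "placeholder: text of the shipping policy",
}


def parse_input(raw: str) -> str:
    """Step 1: parse the input (here, just normalize the question)."""
    return raw.strip().lower()


def call_tool(question: str) -> Optional[str]:
    """Step 2: call a tool (here, a keyword lookup in the knowledge base)."""
    for keyword, answer in KNOWLEDGE_BASE.items():
        if keyword in question:
            return answer
    return None


def handle(raw: str) -> str:
    """Step 3: return structured output, serialized as JSON."""
    question = parse_input(raw)
    answer = call_tool(question)
    return json.dumps(
        {"question": question, "answered": answer is not None, "answer": answer}
    )


print(handle("  What's your RETURN policy? "))
```

In a real agent the model would replace the keyword match in step 2, deciding which lookup to run. The shape of the loop stays the same.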

I looked at my support agent again. Every single query followed the same pattern. User asks question. Agent checks knowledge base. Agent formats response. Done.

Why was i using a model trained on the entire internet for that?

The paper breaks it down. Small models, roughly 1 to 10 billion parameters, can handle these tasks fine. Microsoft's Phi-2 has 2.7 billion parameters. The paper reports it running about 15 times faster than 30 billion parameter models while matching them on code generation.

My friend tried Hymba-1.5B for his scheduling agent. Instructions, tool calls, basic reasoning. It worked. Cost dropped by 95%.

The models got weirdly good

Something happened in the last year. Small models stopped being "budget options."

DeepSeek-R1-Distill-Qwen-7B beats Claude 3.5 Sonnet on the reasoning benchmarks the paper cites. A 7 billion parameter model. Beats Claude.

Salesforce's xLAM-2-8B does tool calling better than GPT-4o, according to the paper. It's 8 billion parameters.

The gap closed. Not completely. But enough that for agent tasks—narrow, repetitive, structured—small models match or beat the big ones.

And they're fast. An agent using a small model responds in milliseconds. Big models take seconds. When you're processing thousands of requests, that compounds.

Why this won't happen (but will)

I talked to a startup founder last week. He said "we're all-in on GPT-4 for our agents."

I asked why.

"Everyone uses it. The infrastructure exists. We don't want to retrain."

Fair. The industry invested billions in LLM infrastructure. Data centers built for serving massive models. APIs designed around them. Inertia is real.

But here's the problem: his API bill was 60% of revenue.

The paper calls this out. The economics don't work. You can't build a sustainable agent business when inference costs eat your margins.

Small models cost 10 to 30 times less to run. You can fine-tune them in hours, not weeks. You can run them on a single GPU. Some run on phones.

The math isn't close.
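A rough back-of-the-envelope version, using the founder's 60% figure and the 10 to 30 times range. It assumes revenue and request volume stay the same and that the whole bill scales with model cost, which is a simplification. The function name is just for illustration.

```python
def inference_share(current_share: float, cost_reduction: float) -> float:
    """Share of revenue spent on inference after costs drop by `cost_reduction` times."""
    return current_share / cost_reduction


for factor in (10, 30):
    share = inference_share(0.60, factor)
    print(f"{factor}x cheaper: inference is about {share:.0%} of revenue")
```

Under those assumptions the bill goes from 60% of revenue to somewhere between about 2% and 6%.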

The random thing about brain size

This is tangential but: i think about mice a lot.

Mice have incredible brain-to-body mass ratios. Roughly 1:40, about the same as humans. Their brains are tiny but efficient for what they need to do.

Agents are like mice. They don't need to know everything. They need to do one thing really well, really fast.

We keep building elephant brains for mouse problems.

The paper makes this point better than i can. It talks about galactic-scale intelligence being useless because light-speed delays make communication impossible. Even a super-intelligent system spanning a galaxy couldn't respond fast enough for human use.

Scale matters. But not the way we thought.

Nobody wants to hear this

The honest truth: most agent companies won't switch to small models.

Not because small models don't work. They do. But because:

leadership already committed to LLM infrastructure

engineers know the big model APIs

marketing loves saying "powered by GPT-4"

retraining feels risky

Also: LLMs are impressive. They can write essays and hold conversations. Small models feel... small.

But agents don't need to be impressive. They need to be reliable. And cheap.

What i'm doing now

I rebuilt my support agent. Used a 7 billion parameter model fine-tuned on my actual support conversations.

It took six hours to set up. Cost $40 to train. Now runs for pennies.

Response quality? Same. Sometimes better because it's not overthinking. Speed? Way faster.

Last month's bill: $8.

Forty tickets answered for $8. Compare that to tuesday.
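Per ticket, the comparison looks like this. It only uses the numbers from this post ($200 for 40 questions, $8 for forty tickets) and leaves out the one-time $40 training cost.

```python
def cost_per_ticket(total_cost: float, tickets: int) -> float:
    """Average spend per answered ticket, in dollars."""
    return total_cost / tickets


before = cost_per_ticket(200, 40)  # the big-model tuesday
after = cost_per_ticket(8, 40)     # the fine-tuned 7B model

print(f"before: ${before:.2f} per ticket")
print(f"after:  ${after:.2f} per ticket")
print(f"ratio:  {before / after:.0f}x cheaper")
```

That works out to $5.00 versus $0.20 per ticket, a 25x difference.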

The thing about tools

One more thing the paper gets right: agents use tools.

When an agent needs to calculate something complex, it calls a calculator. Needs to search? Calls a search API. Needs up-to-date info? Calls a database.

The language model is just the glue. It parses, routes, formats.

You don't need a massive model for glue.

Most of my agent's intelligence comes from its tools, not the model. The model just needs to know which tool to call and how to format the request.

A small model does this fine.
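Here's roughly what "glue" means in code. This is a sketch under assumptions: the tool names, the JSON shape (`tool` plus `arguments`), and the hand-written model output are all hypothetical, not any particular framework's format.

```python
import json
import operator
from typing import Any, Callable, Dict


def calculator(op: str, a: float, b: float) -> float:
    """Hypothetical calculator tool."""
    ops = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}
    return ops[op](a, b)


def search(query: str) -> str:
    """Hypothetical search tool; a real one would call a search API."""
    return f"results for: {query}"


# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[..., Any]] = {"calculator": calculator, "search": search}


def dispatch(model_output: str) -> Any:
    """Run the tool named in the model's JSON output with the given arguments."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Hand-written stand-in for what the model would emit (not real model output).
fake_model_output = '{"tool": "calculator", "arguments": {"op": "mul", "a": 6, "b": 7}}'
print(dispatch(fake_model_output))  # prints 42
```

The model's whole job here is producing that one line of JSON. Everything else is ordinary code.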

Where i'm probably wrong

I think small models will dominate agents. But maybe i'm wrong about the timeline.

Maybe the infrastructure shift takes five years, not two. Maybe there's a breakthrough in LLM efficiency that changes everything. Maybe enterprises stay committed to big models because that's where the talent is.

Also: some agents really do need big models. Customer service agents that handle truly novel situations. Research agents exploring unknown domains. Creative agents generating marketing content.

Heterogeneous systems make sense. Small models handle routing and simple tasks. Big models handle the weird edge cases.
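One way that split could look. The confidence score and the 0.8 threshold are assumptions for illustration, since models don't hand you a calibrated confidence for free, and both model functions are stubs.

```python
from typing import Callable, Tuple


def small_stub(prompt: str) -> Tuple[str, float]:
    """Stub small model: returns (answer, confidence)."""
    if "return policy" in prompt.lower():
        return ("See our return policy page.", 0.95)
    return ("", 0.1)


def large_stub(prompt: str) -> str:
    """Stub large model, used only as a fallback."""
    return "escalated answer"


def route(
    prompt: str,
    small: Callable[[str], Tuple[str, float]],
    large: Callable[[str], str],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Try the small model first; fall back to the large one when it is unsure."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return ("small", answer)
    return ("large", large(prompt))


print(route("What's your return policy?", small_stub, large_stub))
print(route("My order arrived as a live goose", small_stub, large_stub))
```

The big model only gets paid for the requests the small one can't handle.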

But most agents? They're doing the same thing over and over.

The ending i didn't plan

I still think about that tuesday. $200 for 40 questions.

Should've switched earlier. But at least the math is clear now.

Small models aren't worse. They're different. Purpose-built. Efficient.

The future of agentic AI isn't one massive model doing everything. It's dozens of small models, each specialized, each fast, each cheap.

We're just slow to accept it.

Your move.
